Harnessing tools and technologies like machine learning and AI are predicated on one crucial factor: clean, accessible data. It’s the lifeblood that fuels the potential of these capabilities. Often thought of as new or cutting edge, many of these technologies have actually been around a good while (AI is over 50 years old!).

Consider this dynamic analogous to the state of autonomous vehicles. The technology and software, like AI, has been available and ready to be harnessed. However, our road infrastructure, the equivalent of enterprise data, often leaves much to be desired (if not in shambles!). The quality of the infrastructure is thus hindering the full utilization of available technologies. Regardless, tapping the right skillsets and building intentional processes are now easier and more cost-effective than they’ve ever been for businesses to leverage their data and tap top-of-mind technologies.

Recently, we hosted an AI roundtable event alongside a technology executive from NetSuite, where the concept of the ‘data gap’ for emerging and midmarket businesses was discussed in detail. With only 6-8% of companies leveraging AI in their finance and accounting functions, one of the big culprits holding back adoption is inadequate data infrastructure.

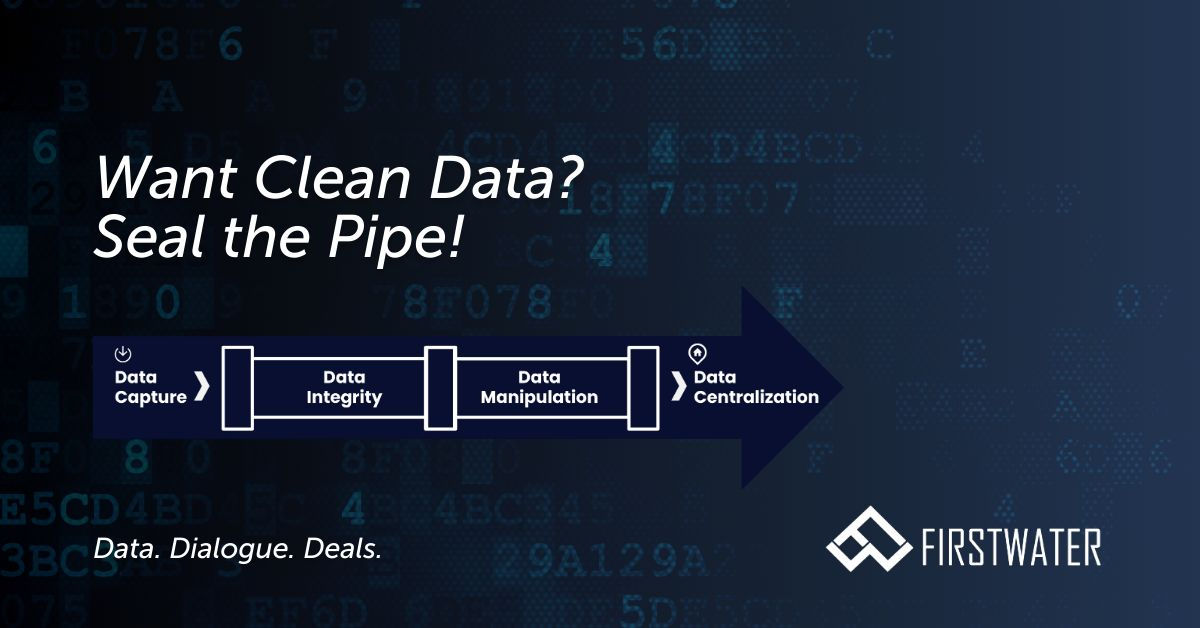

We discussed the first meaningful steps towards deploying AI within the finance function and beyond. To illustrate this, we introduced the analogy of a data pipe—a conduit through which data flows from capture (the “in” end) to a centralized repository (the “out” end). Preventing leakage in each segment of the pipe (“sealing the pipe”) is crucial for creating a data environment optimized for reporting, forecasting, and the adoption of new technologies.

Let’s take a journey through the various sections of this data pipe:

- Data Capture: While ‘garbage in, garbage out’ is a well-known idiom, it is also clear that nothing in is nothing out. If information is important enough, it must be captured through any means available, even if that means manual logging. Identifying and rectifying gaps in critical data capture is the first step toward ensuring comprehensive data flow through the pipe.

- Data Integrity: As data progresses through the pipe, it encounters its first gate: the data integrity checkpoint that represents the controls, exception identification, and corrections for any omissions or non-validated field entries. We call this the “data value checkpoint.” The success of the integrity checkpoint is often determined by steps taken prior to the point of capture, with items like entry requirements (“missing field, do not advance”) and data validation that ensures only qualified entries are made (“how many n’s in banananas?”). Leakage at this stage can compromise the value of entire datasets.

- Data Manipulation or ETL (Extract-Transform-Load): With complete data, it’s now time to shape and move. This checkpoint includes drawing from other datasets, including any joins or merges with central sources of truth (CSTs), to link, create, and unify master datasets. This is where datasets transform (shape) into convenient formats for use. It’s particularly important and useful when disparate data of a similar type needs to be unified. For example, a company running on NetSuite who acquires another business on QuickBooks, and ledger data needs to be unified across the organization prior to full system integration. We refer to this as the “data efficiency checkpoint.”

- Data Centralization: The data is ready, now it needs a home. For many organizations, this may be easy or simple, with an ERP or existing enterprise data warehouse (EDW) being the natural end point for the data pipe. However, many small, emerging, or even mid-market businesses may not have a single end point, either not having established an ERP/EDW or having fragmented data with multiple end points. For these companies, the concept of a relational database or data warehouse may not yet be an option, particularly in the short term. However, central locations can still be established, even if just through smart file organization and process design, done in a way that datasets can be tapped by those who need them on a recurring, automated basis. Centralization in this sense is a function of process and discipline!

Achieving seamless data flow from capture to the endpoint is essential for organizations seeking to harness data for insights, forecasting, and decision-making. As technologies such as AI and ML continue to advance, clean data emerges as a fundamental prerequisite. Finance leaders must seal the data pipe to put their organization in the best position to take advantage, not only for what is coming, but what is already readily available!

————–

First Water Finance (FWF) is a finance solutions platform supporting finance leaders, business owners, and capital partners through FP&A, Corporate Finance, and Community. FWF has supported over 100 management teams and sponsors, concentrated in emerging and mid-market enterprises, professionalizing and accelerating the finance function in pursuit of growth, acquisition, and/or sale objectives.